Originally published on InfoQ

If you work in the tech industry, you regularly come across jargon such as cloud computing, containers, and serverless frameworks. But what is cloud computing?

How does a container work? And how can a function be serverless?

This post tries to decode these technologies and explores when developers should consider containers or serverless functions within their tech stack. For example, if your application has a long startup time, a container would suit it better. Highly efficient stateless functions that need to scale up and down massively would benefit from running as serverless functions.

How Does a Container Work?

A container is a packaged application that contains code along with the necessary libraries and dependencies, so it can run consistently in any environment that provides a compatible container runtime. It helps developers build, ship, deploy, and scale applications easily.

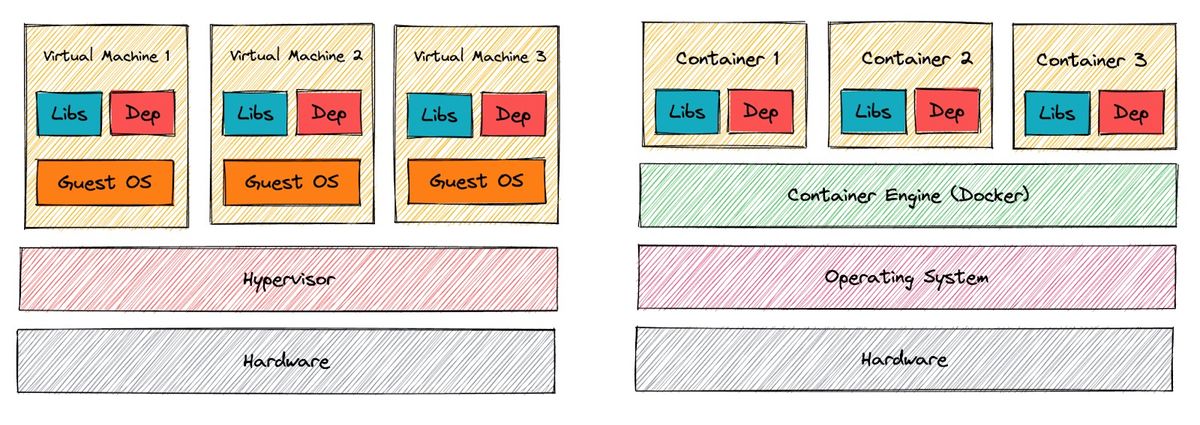

Previously, when containers were not that popular, developers deployed applications in separate virtual machines to achieve isolation. Each virtual machine had a guest operating system that required a fixed share of CPU and memory from the physical hardware. As a result, the virtual machines consumed resources that the applications themselves could have used.

With the adoption of containers, a guest OS is no longer required, since the container engine can share the host operating system with one or more containers. This is a huge benefit over a virtual machine, as more resources can now be dedicated to the apps.

At a fundamental level, the developer creates a container image that contains instructions about how the container should run. This image can then be used to spin up containers that run the actual application. It contains executable code that can run in wholly isolated environments, and the libraries and dependencies for the app are also packaged in the image definition. Docker, Amazon ECS, Kubernetes, and GKE Autopilot on GCP are major containerization platforms.

What Are Serverless Functions?

As the name suggests, serverless computing is a paradigm in computer science where developers do not manage the servers themselves. Instead, a third party provides services to manage the servers so that developers can focus on application logic rather than on maintaining the machines that run the app. Serverless functions are, in general, a further abstraction over containers; under the hood, both use the same underlying technology.

How Does Serverless Computing Work?

Serverless computing is not possible without actual servers. It’s just that developers don’t need to interact with them because vendors, often a cloud provider like AWS, Azure, or GCP, take on server management. Frequently, there are containers underneath serverless infrastructure. AWS, for example, has open-sourced Firecracker, its Rust project that handles the instantiation of functions, and Bottlerocket, the lightweight operating system it uses for the nodes hosting containers.

In this paradigm, developers are provided with an environment where they can write and submit their code. The platform takes care of execution, including allocating memory and CPU and returning the output. The user is then charged for the duration the application runs and for the memory and CPU it consumes. This can be a huge benefit, as it often reduces costs compared to running or managing your own infrastructure.

An important point to note is that serverless functions also leverage containers under the hood, a detail that is abstracted away from developers. When you start a serverless function, the cloud provider spins up a container in which the application is executed. Thus, the underlying technology is similar. However, the way environments are deployed, scaled, and utilized is different.
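
The pay-per-use billing described in this section can be made concrete with a little arithmetic. The following is a minimal sketch, assuming a model that charges per GB-second of memory plus a flat fee per million requests. The `Pricing` class, the function name, and the rates are hypothetical placeholders, not any provider's real prices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical rates; substitute your provider's published prices."""
    per_gb_second: float          # cost of holding 1 GB of memory for 1 second
    per_million_requests: float   # flat fee per million invocations


def estimate_monthly_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    pricing: Pricing,
) -> float:
    """Estimate a month's bill for one function under the assumed model."""
    # Memory-time consumed: invocations x seconds per call x GB allocated.
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * pricing.per_gb_second
    request_cost = (invocations / 1_000_000) * pricing.per_million_requests
    return compute_cost + request_cost


if __name__ == "__main__":
    rates = Pricing(per_gb_second=0.00002, per_million_requests=0.25)
    cost = estimate_monthly_cost(
        invocations=2_000_000, avg_duration_ms=100, memory_mb=512, pricing=rates
    )
    print(f"Estimated monthly cost: ${cost:.2f}")
```

Because the cost scales with invocations, duration, and memory, an idle function costs nothing under this model, which is the main contrast with a container that is billed for as long as it runs.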

Fundamental Differences Between Containers and Serverless

Below, you can see some key differences between containers and serverless functions.

| | Containers | Serverless Functions |
|---|---|---|
| Environment | Users have complete control over underlying infrastructure such as VM and OS; users must manage updates and patching. | The cloud provider manages the underlying infrastructure; users don’t have to handle infrastructure or patching. |
| Scalability | Containers can be scaled by container orchestration platforms, such as Kubernetes or ECS, based on the workload, with a minimum and a maximum number of containers configured. | Since serverless functions are more abstract, there’s minimal configuration to provide compared to containers. You can, however, define limits on how many concurrent invocations are permitted to avoid throttling. |
| Startup time | Containers are typically always running, so there is little startup delay. | Serverless follows the concept of cold and hot starts, meaning a start takes longer if the app has been idle for longer. The application is hibernated or stopped when not used for a long duration, hence the longer startup time. |
| Operating costs | Users are billed based on the duration of usage, a pay-as-you-go model. | Users are billed based on the duration, memory, and compute that the application consumes. The more memory and CPU the application uses and the longer it runs, the higher the cost. |
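
The scalability row mentions a configured minimum and maximum number of containers. The sketch below shows only that clamping idea, under the assumption that load is measured as pending requests and that each container handles a fixed number of them. The function and parameter names are illustrative, and real orchestrators use richer signals than this.

```python
import math


def desired_container_count(
    pending_requests: int,
    requests_per_container: int,
    min_containers: int,
    max_containers: int,
) -> int:
    """Return how many containers to run, clamped to the configured bounds."""
    if requests_per_container <= 0:
        raise ValueError("requests_per_container must be positive")
    if min_containers > max_containers:
        raise ValueError("min_containers must not exceed max_containers")
    # Containers needed to serve the current load, rounded up.
    needed = math.ceil(pending_requests / requests_per_container)
    # Never drop below the floor or exceed the ceiling.
    return max(min_containers, min(needed, max_containers))


if __name__ == "__main__":
    for load in (0, 230, 5000):
        print(load, desired_container_count(load, 50, 2, 10))
```

The minimum keeps some containers warm even with no traffic, which is why containers avoid cold starts but keep incurring cost while idle.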

Use Cases

There are several everyday use cases for containers and serverless computing, and some solutions can be built with either. The major differences lie in how each is priced, how it integrates with other applications and services within the cloud, and how well it scales with load.

For example, if your application is a short-lived process that looks up a geographic location based on IP addresses, it can be serverless. As the number of requests increases, the serverless function will scale up automatically without any manual intervention. If the application is a web application that must stay up and running for long periods of time, a container might suit it best.
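
To illustrate the IP lookup example, here is a minimal sketch of a stateless function. The `handler(event, context)` shape mirrors the convention AWS Lambda uses for Python handlers, but the `"ip"` event field, the response format, and the `_REGIONS` table are assumptions made for illustration. The address ranges are reserved documentation networks, not real allocations.

```python
import ipaddress
from typing import Any

# Hypothetical lookup table; a real service would query a geolocation database.
_REGIONS = {
    ipaddress.ip_network("192.0.2.0/24"): "region-a",
    ipaddress.ip_network("198.51.100.0/24"): "region-b",
}


def handler(event: dict[str, Any], context: Any = None) -> dict[str, Any]:
    """Stateless entry point: everything needed arrives in the event."""
    try:
        address = ipaddress.ip_address(event["ip"])
    except (KeyError, ValueError):
        return {"status": 400, "error": "missing or invalid 'ip' field"}

    # Linear scan is fine for a tiny table; a real lookup would use an index.
    for network, region in _REGIONS.items():
        if address in network:
            return {"status": 200, "ip": str(address), "region": region}
    return {"status": 404, "error": "no region known for this address"}


if __name__ == "__main__":
    print(handler({"ip": "192.0.2.10"}))
    print(handler({"ip": "not-an-ip"}))
```

Because the function keeps no state between calls, the platform can run as many copies in parallel as the request volume demands.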

Also, processes that need faster access to storage can be accommodated using containers, as they can be integrated with file systems. Examples include containers on Amazon ECS using highly scalable Amazon EFS, or Azure Files for containers running on Azure.

The following decision tree can help you decide between a container and a serverless function.

[Figure: decision tree for choosing between a container and a serverless function]

Although there can be an overlap between the two, some of the common use cases of containers and serverless functions are discussed below.

Containers

Programming language support

When you package your application in a container, it becomes platform-independent. Serverless Functions support a few of the most common runtime environments like Java, Python, Go, etc. However, if the application programming language is not supported by the Serverless Function’s runtime, then containers would be an ideal solution. The container can be deployed using any container orchestration platform, such as Kubernetes.

Hosting long-lived applications

Containers make it easier to set up long-lived web applications that need to always run as a service. For example, a tracking application that tracks user behavior on a website can be deployed via containers. Based on the generated events, the container can be scaled up or down. Containers can also be orchestrated by leveraging popular technologies such as Kubernetes, AWS Fargate, etc.

Serverless

API endpoints

Organizations can use a serverless application to deploy API endpoints for a web or mobile server. These APIs can be stateless and short-lived, and can be triggered by an event.

IoT processing

With the increase in home and industrial automation, the use of IoT devices has increased significantly. These IoT devices can leverage the power of serverless computing by running functions when triggered.

Event streaming

In a real-time event-streaming scenario, where events need to be enriched or filtered based on certain conditions, developers can use short-lived serverless functions. You can then use these functions to check if an event is valid, enrich events by looking up demographic information, etc.
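
The validate-and-enrich step described above might look like the following minimal sketch. The required fields, the `_DEMOGRAPHICS` lookup table, and the function names are all hypothetical; in practice the lookup would likely be a cache or database call.

```python
from typing import Any, Optional

# Hypothetical demographic lookup keyed by user ID.
_DEMOGRAPHICS = {
    "user-1": {"age_group": "25-34", "country": "DE"},
    "user-2": {"age_group": "35-44", "country": "US"},
}

# Assumed schema: fields every event must carry to be considered valid.
_REQUIRED_FIELDS = ("user_id", "event_type", "timestamp")


def is_valid(event: dict[str, Any]) -> bool:
    """An event is valid when every required field is present and non-empty."""
    return all(event.get(field) not in (None, "") for field in _REQUIRED_FIELDS)


def process_event(event: dict[str, Any]) -> Optional[dict[str, Any]]:
    """Return an enriched copy of the event, or None if it should be dropped."""
    if not is_valid(event):
        return None
    enriched = dict(event)  # copy so the caller's event is left untouched
    # Unknown users get an empty demographics record rather than an error.
    enriched["demographics"] = _DEMOGRAPHICS.get(event["user_id"], {})
    return enriched


if __name__ == "__main__":
    print(process_event({"user_id": "user-1", "event_type": "click", "timestamp": 1700000000}))
    print(process_event({"user_id": "user-1"}))
```

Each call depends only on its input event, so the function fits the short-lived, stateless profile that serverless platforms scale well.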

Final Verdict

Both containers and serverless applications are cloud-agnostic tools that benefit developers. As a rule of thumb, containers provide isolation and flexibility, whereas serverless aids development and helps you autoscale with minimum runtime costs.

Choosing between containers and serverless applications depends on the use case. For example, a serverless framework would be better if the requirement is to build an API server that serves fast, short-lived responses. On the other hand, if the application always needs to be available and up and running, a containerized application is the way to go.
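
The rules of thumb stated in this post can be collected into a small sketch. This is not a reproduction of the decision tree figure; it only encodes the heuristics given in the text (long startup time, long-lived services, and unsupported languages point to containers, while short-lived stateless work points to serverless). The `Workload` fields, the ordering of the checks, and the `"either"` fallback are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    long_startup: bool       # application takes a long time to start
    long_lived: bool         # must stay up and running as a service
    runtime_supported: bool  # language is supported by the serverless runtime
    stateless: bool          # each request is independent of the others


def recommend(workload: Workload) -> str:
    """Return 'container', 'serverless', or 'either' for a workload."""
    # Slow starts and always-on services are a poor fit for cold starts.
    if workload.long_startup or workload.long_lived:
        return "container"
    # A container can package any language the function runtime lacks.
    if not workload.runtime_supported:
        return "container"
    if workload.stateless:
        return "serverless"
    # Assumed fallback: the text notes that some solutions work either way.
    return "either"


if __name__ == "__main__":
    api = Workload(long_startup=False, long_lived=False,
                   runtime_supported=True, stateless=True)
    print(recommend(api))
```

A real decision would also weigh pricing and integration with other cloud services, which this sketch leaves out.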